QCon London 2009

On March 10-12, I attended the QCon London 2009 conference. Besides feeling very much at home in London again (I’d been away too long), I had an excellent time listening to many great speakers and thought-provoking talks. This is an account of my visit and the best sessions I attended.

Mutable Stateful Objects Are The New Spaghetti Code

According to many attendees, the best talk of QCon 2009 was Rich Hickey’s Persistent Data Structures and Managed References. Hickey truly is a gifted speaker who knows how to get a point across. He is the creator of Clojure, a Lisp dialect running on the JVM. On Wednesday, Hickey had already talked about Clojure itself, explaining why he developed it, why it runs on the JVM (libraries, resource management, type system), and why we will increasingly need functional languages like Clojure. That last point was worked out in more detail in the Persistent Data Structures talk.

Hickey said mutable, stateful objects are the new spaghetti code. Mutable stateful objects are objects that don’t always return the same answer to the same question (like “person.getProfession()”). This is bad because it may cause problems in a multithreaded environment, which we’ll have to deal with a lot more often, thanks to today’s multicore processors. Two separate threads might try to change the object’s state at the same time. You can prevent this with locking, but locking is hard to get right. With this argument, Hickey discards the entire object-oriented programming model: functional programming FTW! Still, he has to concede that state is necessary to build a useful system, and that you must be able to change that state as well. His solution to this is Managed References.

To clarify his solution, Hickey uses the example of a race-walking match. A race-walker must always have at least one foot on the ground. Imagine you want to validate this in an OO model. First you request the position of the left foot: it’s off the ground. Next you request the position of the right foot: it’s also off the ground. It must be a foul, right? Well, it depends. Because state changes over time, you have to be certain that nothing changed between those two requests. In the meantime, the left foot might already have been put back on the ground. So what you really want is a snapshot of the race at a single point in time. You could put a read lock on the object (the walker), but that would freeze the match, which is about as feasible as halting all sales just to check the state of a single sales order.
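
The snapshot idea can be illustrated with a small sketch, written in Scala (one of the languages discussed at the conference) rather than Clojure. It assumes the walker’s state is an immutable value held in a java.util.concurrent.atomic.AtomicReference; Feet, isFoul and walker are hypothetical names. The judge reads the reference once and asks both questions of that one value, so a concurrent update cannot sneak in between the two checks, and no lock stops the walker from moving on.

```scala
import java.util.concurrent.atomic.AtomicReference

// Hypothetical immutable snapshot of a race-walker's feet at one moment.
final case class Feet(leftDown: Boolean, rightDown: Boolean)

// A foul means neither foot is on the ground in the same snapshot.
def isFoul(feet: Feet): Boolean = !feet.leftDown && !feet.rightDown

// The walker's current state: a reference to an immutable value.
val walker = new AtomicReference(Feet(leftDown = true, rightDown = false))

// The judge takes one snapshot and asks both questions of it.
val snapshot = walker.get()

// Meanwhile the walker moves on: the reference now points to a new value...
walker.set(Feet(leftDown = false, rightDown = true))

// ...but the snapshot itself never changes, so the check stays consistent.
val foul = isFoul(snapshot)
```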

The solution Clojure offers is to make the values within the state inaccessible from the outside and to refer to them only indirectly. If you need to change one or more values within the state, the reference to the old state is replaced by a reference to the new state, in a single controlled operation. So you don’t change the state itself; you change the reference to the state instead. To conduct this change safely, you use Clojure’s Software Transactional Memory (STM) system. This mechanism also lets another thread safely read the state while it is being changed: that thread is simply looking at the old state, which is consistent in itself. It’s comparable to the way Oracle handles database transactions, where readers see a consistent version of the data while a writer is still busy. The STM offers several ways to execute a change; for instance, you can choose to make the change synchronously or asynchronously. It’s an elegant mechanism, and it is apparently written in Java, so you could use it from Java code, although Hickey thinks that will look rather weird.
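
The idea of swapping a reference instead of mutating state can be sketched in Scala with a compare-and-set loop. This is a hypothetical analogue, not Clojure’s STM: it manages a single reference, much like a Clojure atom, and has none of the multi-reference transactions that refs provide. Ref, swap and Account are names made up for this sketch.

```scala
import java.util.concurrent.atomic.AtomicReference
import scala.annotation.tailrec

// A hypothetical managed reference: the state itself is immutable,
// only the reference to it changes.
class Ref[A](initial: A) {
  private val cell = new AtomicReference[A](initial)

  // Readers get a complete (possibly slightly old) value, never a half-updated one.
  def deref: A = cell.get()

  // Compute a new state from the old one and install it atomically;
  // if another thread replaced the state first, retry with the fresh state.
  @tailrec
  final def swap(f: A => A): A = {
    val old = cell.get()
    val updated = f(old)
    if (cell.compareAndSet(old, updated)) updated else swap(f)
  }
}

final case class Account(owner: String, balance: Int)

val account = new Ref(Account("alice", 100))
val before = account.deref // a snapshot of the old state
account.swap(a => a.copy(balance = a.balance + 50))
```

The old snapshot in before is untouched by the swap, which is exactly why a reader holding it never needs a lock.
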
I don’t think a slightly weird-looking Java API should be much of a problem, but I do wonder how you would combine this with ‘real’ persistence in a database. An object whose state has changed will want to commit that state to the database at some point. If that database commit is not part of the memory transaction, then what’s the point of the whole operation? At what point in the state’s timeline will that state end up in the database? Unfortunately, Hickey had not yet worked on that question.

So what about Lisp? I’m sorry, but I’m still not impressed with Lisp, or with Clojure for that matter. I have no problem ignoring the parentheses, but it still seems to me that I, as a programmer, must conform to the way the compiler/interpreter thinks (because the language is a direct representation of the resulting AST). As a consumer of a programming language, I’m not interested in homoiconicity. I prefer an elegant, readable language that lets me express myself easily; let the compiler/interpreter do some more work if that’s what it takes. We don’t code in assembler any more either, do we? Here is a sample spelling checker, taken from Hickey’s presentation:


    ; Norvig’s Spelling Corrector in Clojure
    ; http://en.wikibooks.org/wiki/Clojure_Programming#Examples

    ; Split text into lower-case words.
    (defn words [text] (re-seq #"[a-z]+" (.toLowerCase text)))

    ; Count word frequencies (unseen words default to 1, as smoothing).
    (defn train [features]
      (reduce (fn [model f] (assoc model f (inc (get model f 1))))
              {} features))

    ; Expects a large training corpus in big.txt.
    (def nwords (train (words (slurp "big.txt"))))

    ; All strings one edit away: deletions, transpositions, replacements, insertions.
    (defn edits1 [word]
      (let [alphabet "abcdefghijklmnopqrstuvwxyz", n (count word)]
        (distinct (concat
          (for [i (range n)] (str (subs word 0 i) (subs word (inc i))))
          (for [i (range (dec n))]
            (str (subs word 0 i) (nth word (inc i)) (nth word i)
                 (subs word (+ 2 i))))
          (for [i (range n) c alphabet]
            (str (subs word 0 i) c (subs word (inc i))))
          (for [i (range (inc n)) c alphabet]
            (str (subs word 0 i) c (subs word i)))))))

    ; seq turns an empty result into nil, so the or in correct
    ; falls through to the next group of candidates.
    (defn known [words nwords] (seq (for [w words :when (nwords w)] w)))

    (defn known-edits2 [word nwords]
      (seq (for [e1 (edits1 word) e2 (edits1 e1) :when (nwords e2)] e2)))

    ; Pick the most frequent candidate, e.g. (correct "speling" nwords).
    (defn correct [word nwords]
      (let [candidates (or (known [word] nwords) (known (edits1 word) nwords)
                           (known-edits2 word nwords) [word])]
        (apply max-key #(get nwords % 1) candidates)))

Scala and Lift

Elegance, readability, expressiveness: these are also the reasons why I’m more interested in Scala. There were two Scala sessions at QCon: Jonas Bonér talked about several nifty features in Scala, and David Pollak talked about his creation, the Lift web framework. Or rather, he showed a demo in which he built a chat application in 30 lines of code, based on Comet (push technology for web pages). Cool, but I’m less and less impressed by all those “look what I can do in my framework in only one and a half lines of code” demos. What was missing was a decent overview of Lift: what does it do, how does it work, what does it look like? Judging by the Lift code samples I’ve seen so far, it’s not nearly as easy to use as, say, Rails. So what is the reason for choosing Lift over Rails or Grails or what have you, other than that it’s based on Scala? I thought we had agreed by now to use the best tool for the job. For web applications that have to be built fast, I don’t yet see why Lift would be that best tool.

Jonas Bonér went over a great number of examples of how much more pragmatic Scala code can be than Java code. But he also mentioned some more advanced features, like using a trait to layer extra behavior on top of the existing java.util.Set methods, in this case making a set of strings case-insensitive:

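    // Makes any java.util.Set[String] case-insensitive when mixed in,
    // e.g. new java.util.HashSet[String] with IgnoreCaseSet.
    // contains and remove take Object in java.util.Set, so their parameters
    // must be AnyRef for the abstract overrides to compile.
    trait IgnoreCaseSet extends java.util.Set[String] {
      private def lower(e: AnyRef): AnyRef = e match {
        case s: String => s.toLowerCase
        case other     => other
      }

      abstract override def add(e: String): Boolean =
        super.add(e.toLowerCase)

      abstract override def contains(e: AnyRef): Boolean =
        super.contains(lower(e))

      abstract override def remove(e: AnyRef): Boolean =
        super.remove(lower(e))
    }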
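
The same stacking mechanism can be seen in a smaller, self-contained sketch. Lowercasing and Trimming are hypothetical traits, each wrapping add with abstract override and passing the modified element on via super. When several such traits are mixed in, the rightmost one runs first: here Trimming strips the whitespace, then Lowercasing lowercases the result, and HashSet finally stores it.

```scala
import java.util.{HashSet, Set => JSet}

// Hypothetical stackable trait: lower-cases every element before storing it.
trait Lowercasing extends JSet[String] {
  abstract override def add(e: String): Boolean = super.add(e.toLowerCase)
}

// Hypothetical stackable trait: strips surrounding whitespace before storing.
trait Trimming extends JSet[String] {
  abstract override def add(e: String): Boolean = super.add(e.trim)
}

// Rightmost trait runs first: Trimming, then Lowercasing, then HashSet.add.
val tags = new HashSet[String] with Lowercasing with Trimming
tags.add("  Scala ")
tags.add("SCALA") // normalizes to "scala", already present
```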

He also showed a sample in which traits are used to model the various aspects of a class:

    val order = new Order(customer)
      with Entity
      with InventoryItemSet
      with Invoicable
      with PurchaseLimiter
      with MailNotifier
      with ACL
      with Versioned
      with Transactional

Here, each trait defines the specific attributes for one facet of the order. If you do this well, the traits can be made generic and therefore reusable. It does require a rather different view on how to model your classes. And speaking of modeling: how would you model this? You could probably represent traits as interfaces or abstract classes in UML.

Overall, Scala played a somewhat strange role during the conference. It was mostly in the air. Nobody was explicitly fanatical about Scala; even the speakers who gave the Scala talks were among the most enthusiastic listeners at Hickey’s Clojure sessions. And of course, in Hickey’s view, Scala must be evil, as it’s still object-oriented (besides the functional elements it does contain). On the other hand, more and more people I talk to are convinced that Scala would be the best successor to Java, and fewer and fewer think that the language’s more complex and extensive features will be too high a barrier.
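
The trait-per-facet idea from Bonér’s Order example can be sketched in miniature. The Order class and the two facets, Versioned and MailNotifier, are hypothetical stand-ins for the ones in that example; this MailNotifier only records messages instead of sending mail. Each trait carries its own state and behavior and knows nothing about orders, which is what makes it reusable.

```scala
// Hypothetical core class: knows only about the order itself.
class Order(val customer: String) {
  private var items = Vector.empty[String]
  def addItem(item: String): Unit = items :+= item
  def itemCount: Int = items.size
}

// Facet: tracks a version number; could be mixed into any class.
trait Versioned {
  private var currentVersion = 0
  def version: Int = currentVersion
  def bumpVersion(): Unit = currentVersion += 1
}

// Facet: collects outgoing notifications (a stand-in for sending mail).
trait MailNotifier {
  private var outbox = Vector.empty[String]
  def notifyByMail(message: String): Unit = outbox :+= message
  def sentMessages: Seq[String] = outbox
}

// Facets are combined at construction time, as in the Order example.
val order = new Order("alice") with Versioned with MailNotifier
order.addItem("book")
order.bumpVersion()
order.notifyByMail(s"Order for ${order.customer} now has ${order.itemCount} item(s)")
```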

Ioke and Obama

The parade of ‘new’ languages had actually started on Tuesday night, at a pre-conference event at ThoughtWorks’ London offices. There, Ola Bini spoke for two hours about developing a new language for the JVM. His examples were in JRuby and Ioke (the language he developed), but I had expected more about Ioke itself. Instead, we got a rather generic presentation about developing a language. Nevertheless, those two hours flew by.

Besides, I enjoyed the opportunity to look around at ThoughtWorks. The people at ThoughtWorks had somewhat underestimated the number of visitors, so there was barely enough room for us all. Still, it was nice to be there.

I skipped Fowler’s talk about three years of Ruby experience at ThoughtWorks. It’s sad: I was really enthusiastic when I wrote my first lines of Ruby, and I still think it’s a beautiful language, but I don’t think Ruby is the best language/tool for the code I most want to write (back-end domain logic). So the only time I saw Fowler in action (outside the White Hart pub) was in the presentation of the software used for Obama’s presidential campaign, which he delivered together with Zack Exley. In an inspiring presentation (all the more so given the time slot: Wednesday afternoon, just before the party), they talked about how ThoughtWorks had integrated existing and new software into a solid, running system for managing campaign workers and volunteers. Fowler would not say whether this had won the election for Obama, but it was clear that Obama used the Internet like no candidate before him.

Not All Of A System Will Be Well Designed

Eric Evans, author of Domain-Driven Design, looked back on the five years since the book came out. One thing he would have liked to emphasize more is context mapping and context boundaries. Evans considers a domain model to have boundaries based on its context: an entity (like Person) might have an entirely different meaning from one domain to the next. He also feels that the building blocks for designing a domain model (e.g. Entity, Value Object, Factory, Repository) are often overemphasized. It’s not about the building blocks, it’s about the principles of DDD. I couldn’t agree more. Nevertheless, Evans described a new building block that isn’t mentioned in the book: Domain Events. Domain Events should not be confused with technical events; they are events in the domain that have meaning to domain experts. A DDD architecture can benefit from Domain Events because they offer a way to decouple subsystems. They may also result in a better-performing system, even though people often assume otherwise.
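
A minimal sketch of how Domain Events can decouple subsystems, assuming a simple in-process publisher; EventBus, OrderPlaced and OrderService are hypothetical names, not taken from Evans’s talk. The ordering code publishes a fact that means something to the business, and billing and shipping react to it without the ordering code knowing they exist. A production system might use a message broker instead of an in-memory list of handlers.

```scala
import scala.collection.mutable

// A domain event: a fact the business cares about, named in domain language.
sealed trait DomainEvent
final case class OrderPlaced(orderId: String, amount: BigDecimal) extends DomainEvent

// Hypothetical in-process publisher that fans events out to subscribers.
final class EventBus {
  private val handlers = mutable.ListBuffer.empty[DomainEvent => Unit]
  def subscribe(handler: DomainEvent => Unit): Unit = handlers += handler
  def publish(event: DomainEvent): Unit = handlers.foreach(h => h(event))
}

// The ordering subsystem only knows the bus, not who listens to it.
final class OrderService(bus: EventBus) {
  def placeOrder(orderId: String, amount: BigDecimal): Unit =
    bus.publish(OrderPlaced(orderId, amount))
}

val bus = new EventBus
val invoices = mutable.ListBuffer.empty[String]
val shipments = mutable.ListBuffer.empty[String]

// Billing and shipping subscribe independently of each other.
bus.subscribe { case OrderPlaced(id, amount) => invoices += s"$id:$amount" }
bus.subscribe { case OrderPlaced(id, _) => shipments += id }

new OrderService(bus).placeOrder("o-1", BigDecimal(25))
```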

In a second session, called Strategic Design, Evans discussed a familiar situation: the people who want to keep their application neat and clean, according to its architecture and design, are often outpaced by the fast ‘hackers’ on the team, who hack something together without much regard for the rules, and who are also the ones the business appreciates most. Why? Because these hackers work in the Core Domain, adding the core features the business needs most. (I remember doing just that on several occasions, and indeed: users love you, fellow coders hate you.) The designers/developers/architects who do care about the rules are busy in the background fitting in and correcting these quick hacks, something the business doesn’t really care about, if it even notices. So what can be done? Make sure you work in the Core Domain as well. Accept that not all of the system will be well designed. Isolate the parts of the system that haven’t been well designed, map their boundaries, and put an abstraction layer on top of them. Then start building those core features, supported by a small piece of framework on top of these ‘big balls of mud’, the poorly designed systems that you won’t be able to change. The biggest challenge in a setup like that, I would say, is to prevent new big balls of mud from taking shape.
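
Isolating a poorly designed part of the system behind an abstraction layer could look like the following sketch, in the spirit of the Anticorruption Layer pattern from Evans’s book. LegacyCustomerSystem and its pipe-delimited records are hypothetical: the point is that core domain code depends only on the CustomerDirectory interface, while the translation of legacy quirks stays behind that boundary.

```scala
// Hypothetical legacy "big ball of mud": stringly typed, awkward to use.
final class LegacyCustomerSystem {
  private val records = Map("42" -> "42|DOE, JANE|Y")
  def fetchRaw(id: String): String = records.getOrElse(id, "")
}

// Clean domain model used by the core domain.
final case class Customer(id: String, name: String, active: Boolean)

// The boundary that core domain code depends on.
trait CustomerDirectory {
  def find(id: String): Option[Customer]
}

// Adapter that translates legacy records into domain objects.
final class LegacyCustomerDirectory(legacy: LegacyCustomerSystem) extends CustomerDirectory {
  def find(id: String): Option[Customer] =
    legacy.fetchRaw(id).split('|') match {
      case Array(rawId, rawName, flag) =>
        // "DOE, JANE" becomes "Jane Doe".
        val name = rawName.split(", ").reverse.map(_.toLowerCase.capitalize).mkString(" ")
        Some(Customer(rawId, name, flag == "Y"))
      case _ => None // unknown id or malformed record
    }
}

val directory: CustomerDirectory = new LegacyCustomerDirectory(new LegacyCustomerSystem)
```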

You Can’t Buy Architecture

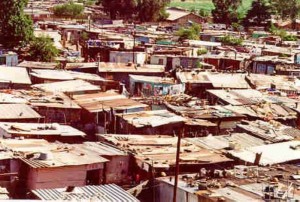

Yet another speaker, Dan North, an architect at ThoughtWorks, described how he eventually did manage to leave a big ball of mud a little better than he found it. He used the metaphor of a shanty town. You can’t magically turn it into a neatly laid-out suburb, but you can, for instance, try to install running water everywhere. But “you can’t buy architecture”. A running gag during the conference was that you could buy SOA in two colors: red (Oracle) and blue (IBM). Fortunately, we know there’s more to it than that: SOA is a concept, not a box you buy off the shelf, and it is something you can achieve even with quite simple (and cheap) tools.

North’s idea of an architect is someone who collects stories: someone who is able to hear out the history of the shanty town and who will understand the decisions that were made, even if he would have made them differently. When he has heard enough, he won’t force through all the necessary changes at once. It is possible to take smaller steps and use a transitional architecture, to get people used to the new ideas and to see how the changes turn out. Dan North comes across as an architect who is just as concerned with the social aspects of software development as with the technical side of things. His last lesson learned: life moves on. Accept that people will make other choices after you’ve left the project; they may make different decisions than you would have. If, some time later, you happen to talk to someone still on the project, you might hear that a theater has been built in the shanty town. Maybe not the way you would have built it, but that’s the way it goes.

War On Latency

After my own performance tuning work at my current client, I was eager to attend the Architecting for Performance and Scalability panel early Wednesday morning. Well-known names like Cameron Purdy (Oracle) and Kirk Pepperdine (independent consultant) and a less well-known one, Eoin Woods (architect at a big investment group), discussed all kinds of performance-related topics. A discussion like this easily goes off in all directions, leaving a collection of individual sound bites. Simple as they are, they still offer food for thought. For example:

- The war on latency will become more important than execution profiling.

- If you have to tune a 9-to-5 system, record user actions during the day. You can replay them in the evening to simulate a representative load on the system.

- In order to tune well, you have to benchmark. Benchmarking must be treated as a project in itself, and it might turn out to be more expensive than throwing more hardware at the system.

- If using ‘new’ languages (JRuby, Groovy etc.) causes performance problems, those problems will be solved, just as they were with Java.

- The problem with marshaling XML in a SOA isn’t the time it takes to (de)serialize XML. It’s the time it takes to (de)serialize XML multiple times in the same request.
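
The last point can be illustrated with a sketch: parse the incoming document once at the edge of the request and pass the parsed tree along, instead of handing the raw string to every step that needs it. It uses the JDK’s DOM parser (javax.xml.parsers); the order payload, the two processing steps and the parse counter are hypothetical.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Document

var parseCount = 0 // counts how often the deserialization cost is paid

def parse(xml: String): Document = {
  parseCount += 1
  val builder = DocumentBuilderFactory.newInstance().newDocumentBuilder()
  builder.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
}

// Hypothetical processing steps that each need data from the same request.
def customerId(doc: Document): String =
  doc.getElementsByTagName("customer").item(0).getTextContent
def amount(doc: Document): Int =
  doc.getElementsByTagName("amount").item(0).getTextContent.trim.toInt

val request = "<order><customer>c-7</customer><amount>30</amount></order>"

// Wasteful: every step re-parses the raw XML.
val slow = (customerId(parse(request)), amount(parse(request)))
val parsesWhenRepeated = parseCount

// Better: parse once, share the Document across steps.
parseCount = 0
val doc = parse(request)
val fast = (customerId(doc), amount(doc))
val parsesWhenShared = parseCount
```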

Cloud Databases

I didn’t see much of the sessions about large web architectures: BBC, Guardian, Twitter. Although no doubt very interesting, this isn’t the kind of architecture I deal with on a daily basis. That in itself isn’t a reason not to learn about them; it’s more that there were always talks scheduled at the same time that were more up my alley. I did see a talk about cloud databases. Cloud computing, delegating parts of your system to services available on the web (just improvising a definition here), is hot. A cloud database is a database system that is available to you on the web: instead of using Oracle, you put your data in a cloud database. That might seem unthinkable to some, especially if, for instance, you have to meet strict security requirements. On the other hand, many applications don’t have to deal with such requirements, or find other ways to deal with them. For those applications, cloud databases offer some attractive advantages. You don’t have to do maintenance or support for them. They can be almost limitless in size: Google’s BigTable (which is not yet available outside Google) is said to contain a table 800 TB in size, distributed over many servers.

But the most interesting aspect of cloud databases is that they are not (always) relational databases. Instead, they often have some kind of cell structure, comparable to that of a spreadsheet. Each attribute value ends up in its own cell. You don’t have to define beforehand which attributes each entity contains, and not every row will necessarily use the same attributes. The best part is that time is sometimes a separate dimension. If a row’s attribute already has a value, then assigning a new value will not overwrite the old one; the new value is added instead, with a version number or timestamp. Locking becomes a thing of the past. You always see the latest (at the time of retrieval) consistent version of an instance, but you could also request its state at some point in the past.
The talk presented several implementations that are currently available, discussing their advantages and disadvantages. To me it became clear that this is just the beginning of the whole concept. There are many different implementations, but most are only just ready, or not ready yet. There are obvious disadvantages, especially the fact that you often have to use a custom query language instead of SQL. And the cloud database that seems to be the best, Google’s, is not (yet?) available outside Google.
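
The versioned-cell model described above can be sketched as a small in-memory store (Scala 2.13 or later, for TreeMap.rangeTo). This is not the API of BigTable or any other product; VersionedTable, put and get are hypothetical names. Each write adds a new timestamped version instead of overwriting the old one; a read returns the newest version by default, or the version that was current at a given timestamp.

```scala
import scala.collection.immutable.TreeMap

// Hypothetical in-memory table: (row, column) -> versions ordered by timestamp.
final class VersionedTable {
  private var cells = Map.empty[(String, String), TreeMap[Long, String]]

  // Writes never overwrite: they add a new version under the given timestamp.
  def put(row: String, column: String, value: String, timestamp: Long): Unit = {
    val versions = cells.getOrElse((row, column), TreeMap.empty[Long, String])
    cells = cells.updated((row, column), versions.updated(timestamp, value))
  }

  // Newest version at or before asOf; by default the newest version overall.
  def get(row: String, column: String, asOf: Long = Long.MaxValue): Option[String] =
    cells.get((row, column)).flatMap(_.rangeTo(asOf).lastOption.map(_._2))
}

val table = new VersionedTable
table.put("order-1", "status", "placed", timestamp = 100L)
table.put("order-1", "status", "shipped", timestamp = 200L)

val latest = table.get("order-1", "status")                 // newest value
val earlier = table.get("order-1", "status", asOf = 150L)   // value as of t=150
val tooEarly = table.get("order-1", "status", asOf = 50L)   // nothing written yet
```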

QCon 2010

Looking back, I’m very impressed by these three days of QCon London 2009. The biggest plus of the conference was its small scale. With just about 400 visitors, you’re likely to run into speakers during breaks, so it’s easy enough to talk to some of them informally. Likewise, the sessions are smaller in scale: the conference rooms are smaller, and you’re quite close to the speakers. But more importantly, these speakers all proved to be excellent. The subjects covered weren’t limited to Java or to some vendor, but seemed to come directly from the day-to-day experience of professional architects and developers. That made them recognizable and means you can learn from them. And I even had to miss many more interesting sessions running in parallel. My conclusion on the whole: I’m looking forward to next year’s QCon!

2009-03-26.